I’m a back-end developer, and a friend of mine who lives primarily in the front-end world has repeatedly enthused to me about node.js. I should try it, he insists, because it’s really cool. Of course I’ll try it, I maintain, whenever I find the time.

Finding the time for pet projects amongst the busy demands of keeping up with my back-end world, my writing, having a social life and the general day-to-day duties of the modern world is always tricky. So it took one of the latter tasks (specifically, cleaning the bathroom) to get me to pick up node.js. To be honest, anything would have been preferable to scrubbing out the bath, and this seemed like a more productive use of my time than trawling my way through r/funny.

Making nodes is easy

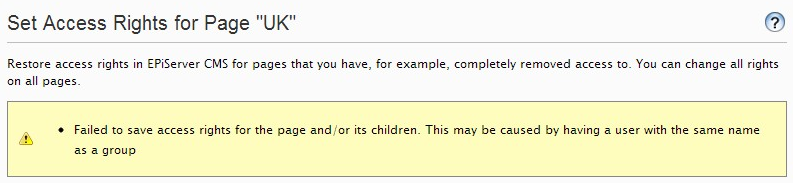

Actually setting node.js up (on Windows) was a doddle. Just run the installer, and you’re done. I opened the node command prompt and tried console.log('Hello'). It worked. Next came the trickier part.

What’s it all aBot?

I needed a clear goal for my bot. I’d seen bots that watch the Twitter stream for particular words, hashtags or mentions and tweet a reply. This seemed achievable, so I created a new Twitter account called Coding Sins. The idea is that whenever someone has committed a coding sin, such as skipping a unit test they’d intended to write, or breaking the build, they tweet their sin @codingsins, which then replies with a random message. Mostly it absolves sins, but sometimes not.

A Pea Eye

In order for my bot to be able to monitor the stream and post tweets, it needs access to Twitter’s API. To get this, I needed to create a Twitter Application. The end result of this process is the key, secrets and token required for OAuth login, so that my node.js application can access the API. Registering a Twitter app is very straightforward, although there are a few points worth bearing in mind:

- You need to register it using the same account that your app will tweet from. That’s how the two are linked.

- You’ll need read and write permissions to tweet. The default setting is read only, but the option to change permissions is on the tab after the option to create an access token, which can be misleading as the natural impulse is to work through the tabs in order. Change the permissions THEN create the token. A bit of googling revealed I’m far from alone in being tripped up by this. It’s obvious in retrospect, but was confusing at the time.

- Twitter’s settings have quite a high latency. When you change settings, it’s not unusual for them to appear unchanged at first. Give it a few seconds, then refresh.

Look, I thought this was a node.js post. Where’s the node.js stuff, eh?

Fine, we’re now ready to code some node. Before I dive in though (I know, get on with it!) a couple of credits are due:

- The starting point for my code was taken from Sugendran’s simple tweet bot. I modified it for my purposes.

- Sugendran’s code uses Tuiter, which is a node module that exposes the Twitter API. I found it excellent as it allowed me to simply consult the Twitter API’s documentation for implementation.

Anyway, here’s the whole Twitterbot node.js script:

    var conf = {
        keys: {
            consumer_key: 'xxxx',
            consumer_secret: 'xxxx',
            access_token_key: 'xxxx',
            access_token_secret: 'xxxx'
        },
        terms: ['@codingsins']
    };

    // We're using the tuiter node module to access the twitter API
    var tu = require('tuiter')(conf.keys);

    // This is called after attempting to tweet.
    // If it fails there isn't much we can do apart from log it to the console for debugging
    function onTweeted(err) {
        console.log('tu.update complete');
        if (err) {
            console.error("tweeting failed");
            console.error(err);
        }
    }

    // This is called when a matching tweet is found in the stream
    function onTweet(tweet) {
        console.log("Replying to this tweet: " + tweet.text);
        console.log("Screen name: " + tweet.user.screen_name);
        // Note we're using the id_str property since javascript is not accurate for 64 bit integers
        tu.update({
            status: '@' + tweet.user.screen_name + ' ' + getRandomMessage(),
            in_reply_to_status_id: tweet.id_str
        }, onTweeted);
        console.log('tu.update called');
    }

    // This listens to a twitter stream with the filter that is in the config
    tu.filter({
        track: conf.terms
    }, function(stream) {
        console.log("listening to stream");
        stream.on('tweet', onTweet);
    });

    // This contains our collection of messages and selects one at random
    function getRandomMessage() {
        var messages = [
            'Message 1.',
            'Message 2.',
            'Etc'
        ];
        return messages[Math.floor(Math.random() * messages.length)];
    }

I’ve xxxx’d out the OAuth config as that needs to be kept a secret between my script, my Twitter application and myself. Also, in the interests of keeping some mystery about the bot, I’ve not included the actual messages it tweets.
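
One way to keep those secrets out of the script file altogether is to read them from environment variables. This is only a sketch of that idea, not what my bot does: the loadKeys helper and the TWITTER_* variable names are made up for illustration, and nothing in tuiter or Twitter requires them.

```javascript
// Hypothetical helper: the environment variable names below are assumptions,
// chosen for this example only.
function loadKeys(env) {
    var names = {
        consumer_key: 'TWITTER_CONSUMER_KEY',
        consumer_secret: 'TWITTER_CONSUMER_SECRET',
        access_token_key: 'TWITTER_ACCESS_TOKEN_KEY',
        access_token_secret: 'TWITTER_ACCESS_TOKEN_SECRET'
    };
    var keys = {};
    var missing = [];

    // Copy each value across, remembering any that are absent or empty
    Object.keys(names).forEach(function (field) {
        var value = env[names[field]];
        if (value) {
            keys[field] = value;
        } else {
            missing.push(names[field]);
        }
    });

    // Fail early with a readable message rather than a confusing OAuth error later
    if (missing.length > 0) {
        throw new Error('Missing environment variables: ' + missing.join(', '));
    }
    return keys;
}

// In the bot this could stand in for the hard-coded keys, for example:
// var conf = { keys: loadKeys(process.env), terms: ['@codingsins'] };
```

The object it returns has the same four property names as conf.keys in the script, so the rest of the code would not need to change.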

The two main points of interest in the script are:

- The tu.filter call. This uses Twitter’s streaming API to give a stream of tweets filtered by whatever was defined in the config, which in this case is @codingsins. Note that when filtering the stream, everything is essentially text. Filtering for screen names is just the same as filtering for, say, hashtags, or indeed anything else.

- The onTweet function. This is called in response to any tweets appearing in the filtered stream. It uses Twitter’s RESTful API to tweet a random message in reply.
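
The id_str comment inside onTweet deserves a quick illustration. JavaScript numbers are double-precision floats, so integers above 2^53 - 1 can’t all be represented exactly, and tweet ids are big enough to be affected. The payload below is invented for the example (the id is not a real tweet), but it has the same pair of fields that the script relies on.

```javascript
// Made-up payload: the same identifier as a number (id) and as a string (id_str)
var rawJson = '{"id": 1234567890123456789, "id_str": "1234567890123456789"}';
var tweet = JSON.parse(rawJson);

// The largest integer a JavaScript number can hold exactly
console.log(Number.MAX_SAFE_INTEGER); // 9007199254740991

// The numeric id was rounded during parsing, so it no longer matches the string
console.log(Number.isSafeInteger(tweet.id));    // false
console.log(String(tweet.id) === tweet.id_str); // false

// The string form survives untouched, which is why the reply uses it
console.log(tweet.id_str); // 1234567890123456789
```

Passing the rounded number as in_reply_to_status_id would point the reply at an id that doesn’t match the original tweet, so the string is the safe choice.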

Before running the script, the tuiter module needs installing. Handily, node.js comes with an incredibly easy package manager, npm. All that needs doing is to fire up the command prompt and enter:

npm install tuiter

It’ll output a bunch of lines summarising what it’s doing and then we’re good to go.

Running the script is simply a matter of saving it as a .js file (e.g. codingsins.js) and starting it from the command line like this:

node codingsins.js

That works fine in Linuxland, but in Windows you may need to type node.exe instead of node, or it’ll shit the bed. Or at least fail with an error message.
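
Before pointing the script at the live stream, it can help to exercise the reply logic offline, without any keys. The sketch below is an assumption-laden stand-in rather than part of my bot: createFakeStream, fakeTu and makeOnTweet are hypothetical names, and the fakes only mimic the two calls the script actually makes (stream.on and tu.update).

```javascript
// Minimal stand-in for the stream object: it only supports on() and emit()
function createFakeStream() {
    var handlers = {};
    return {
        on: function (event, handler) {
            (handlers[event] = handlers[event] || []).push(handler);
        },
        emit: function (event, payload) {
            (handlers[event] || []).forEach(function (handler) {
                handler(payload);
            });
        }
    };
}

// Stand-in for the tuiter client: records statuses instead of posting them
var sent = [];
var fakeTu = {
    update: function (params, callback) {
        sent.push(params);
        callback(null);
    }
};

// Same shape as onTweet in the script, but with the client and the message
// picker passed in so they can be swapped for fakes
function makeOnTweet(client, pickMessage) {
    return function onTweet(tweet) {
        client.update({
            status: '@' + tweet.user.screen_name + ' ' + pickMessage(),
            in_reply_to_status_id: tweet.id_str
        }, function (err) {
            if (err) {
                console.error('tweeting failed', err);
            }
        });
    };
}

var stream = createFakeStream();
stream.on('tweet', makeOnTweet(fakeTu, function () { return 'Message 1.'; }));

// Invented tweet containing only the fields the handler reads
stream.emit('tweet', {
    text: '@codingsins I skipped a unit test',
    user: { screen_name: 'example_dev' },
    id_str: '1234567890123456789'
});

console.log(sent[0].status); // @example_dev Message 1.
```

Nothing here touches the network, so it runs with a plain node install and no npm modules.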

The icing on the node.js cake is keeping the application running through crashes and restarts. There are a few tools out there to achieve this, but I used Forever, mainly because it was already installed on the server I used to host the application. Getting it up and running, er, forever was simply a matter of SSHing into the server and entering this:

forever start codingsins.js

Confess

That’s it! All in all I was impressed with how relatively easy this was to accomplish. From a half-hearted start born of avoiding cleaning the bathroom on a Saturday afternoon, it took me until around nine o’clock to have a working node.js Twitterbot.

If you want to give it a try, tweet your coding sin @codingsins and await your judgement.

Here’s a mouse riding a node toad: